Why Hong Kong must embrace causal AI, the new reasoning intelligence

- The analytical capability and transparency inherent in causal AI could give Hong Kong a solid advantage in AI deployment and governance

Questions have been raised over the blurring of boundaries between humans and machines, as well as the ethical implications of machines with unbridled decision-making capabilities.

The field of causal AI is poised to play an increasingly important role in developing more trustworthy and valuable systems, and has shown impressive capabilities across domains. The methodology holds significant promise for high-stakes applications, such as healthcare, finance and public policy, where “explainability” and accountability are paramount.

Causal AI represents a paradigm shift in the human-AI dynamic and can help address the significant concern that AI systems will increasingly operate with limited or no human interaction.

Importantly, causal AI can augment the decision-making of other forms of AI while preserving human agency, with the AI system acting as a tool or executor, and humans as curators and decision-makers. This scientific methodology can significantly support regulatory and oversight mechanisms in ensuring that AI systems explain their decision-making processes and outcomes coherently and transparently.

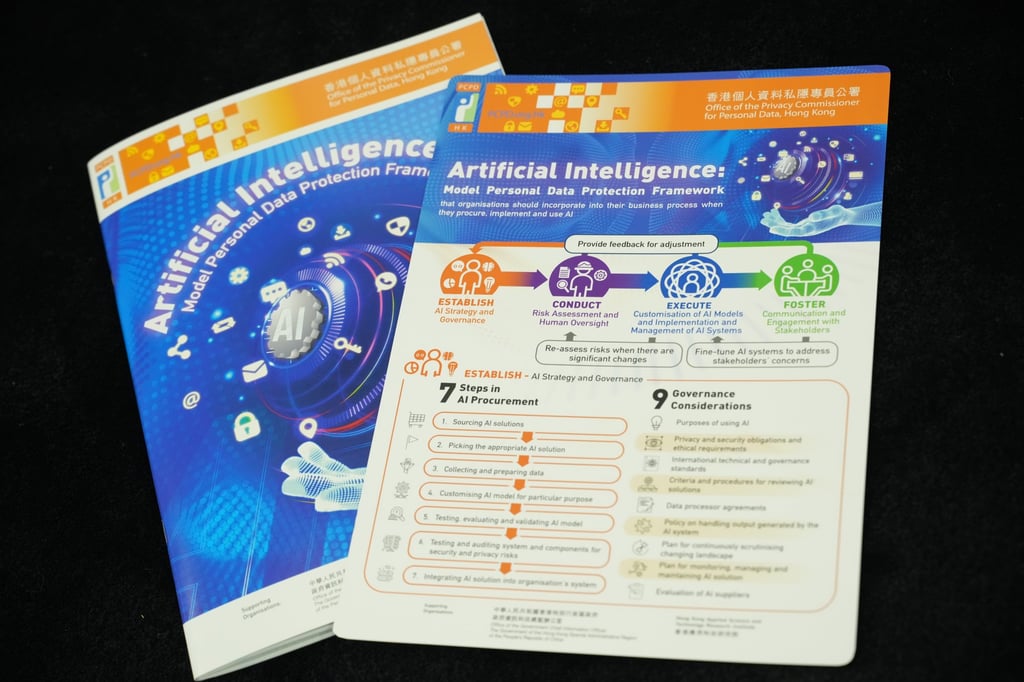

While regulators worldwide grapple with the intricacies of governing these emerging technologies, the European Union recently established a legal and regulatory framework with provisions coming into force over the next six months to three years.

By modelling the causal drivers of complex phenomena, causal AI can better detect anomalies, identify cyber threat sources and respond with greater agility. This capability is essential as AI increasingly integrates into critical infrastructure, financial systems and other high-stakes domains.

Worryingly, the annual cost of cybercrime is estimated to reach US$10.5 trillion by 2025, and consumers will ultimately bear the cost.

The discourse surrounding advanced technologies reflects their profound transformative potential and the urgent need to thoughtfully navigate their complex ethical, social and economic challenges.

By seizing the opportunity to establish a comprehensive, evidence-based approach to AI governance and regulation, Hong Kong can become a significant global player in the ethical and responsible use of these powerful technologies.

Dr Jane Lee is president of Our Hong Kong Foundation